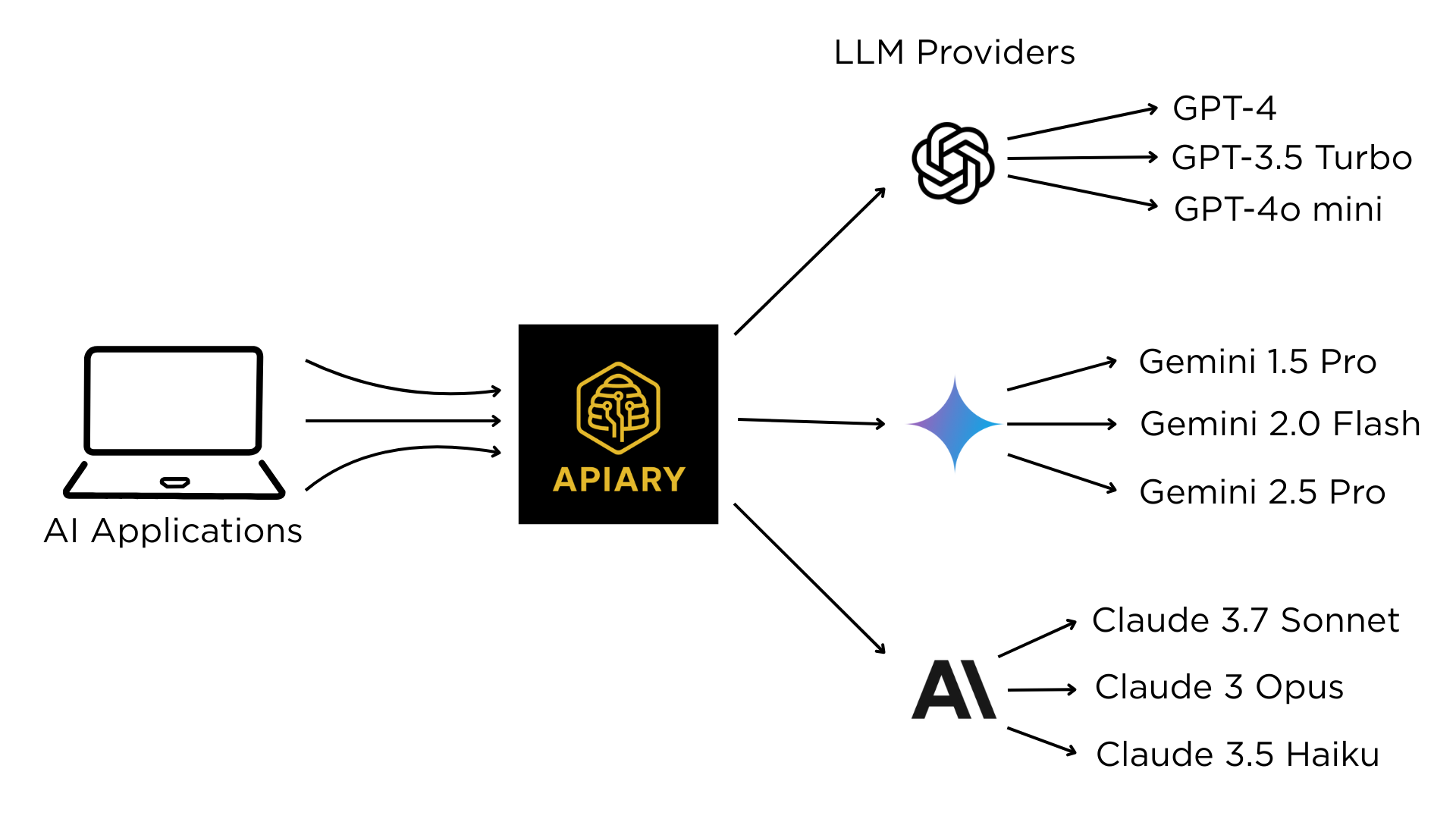

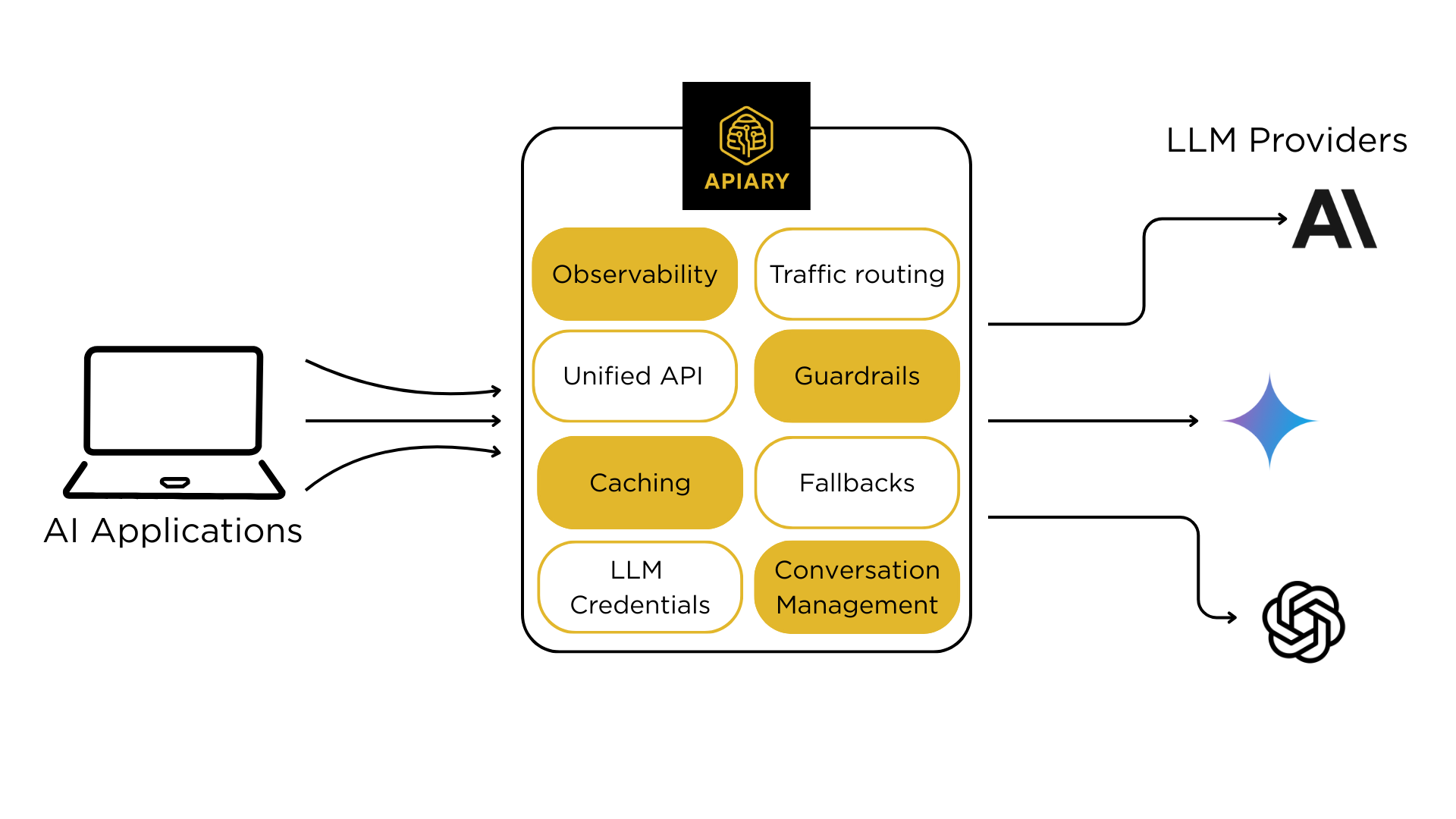

Multiple LLM providers. One API.

Simplify access to multiple large language models with a single, unified API.

Manage common concerns like authentication and traffic routing in one location. Ensure availability with LLM fallbacks. Optimize costs and performance with configurable features like semantic caching and guardrails.
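
To illustrate what an LLM fallback does, here is a minimal sketch. It assumes each provider is wrapped as a plain Python function that takes a prompt and returns text. The gateway tries providers in order and returns the first successful response. The names `complete_with_fallback`, `AllProvidersFailed`, `flaky_primary`, and `backup` are hypothetical and for illustration only; this is not Apiary's API.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical provider type: takes a prompt, returns completion text.
Provider = Callable[[str], str]


class AllProvidersFailed(Exception):
    """Raised when every provider in the fallback chain raises an error."""


def complete_with_fallback(
    prompt: str, providers: Sequence[Tuple[str, Provider]]
) -> Tuple[str, str]:
    """Try each (name, provider) pair in order; return (name, text) of the first success."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:
            # A real gateway would likely retry only on transient errors
            # (timeouts, rate limits) rather than on every exception.
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Toy providers for the usage example (hypothetical).
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary timed out")


def backup(prompt: str) -> str:
    return f"echo: {prompt}"


if __name__ == "__main__":
    print(complete_with_fallback("hi", [("primary", flaky_primary), ("backup", backup)]))
```

Ordering the providers explicitly keeps the routing policy in one place. Callers see a single function regardless of which provider actually served the request.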

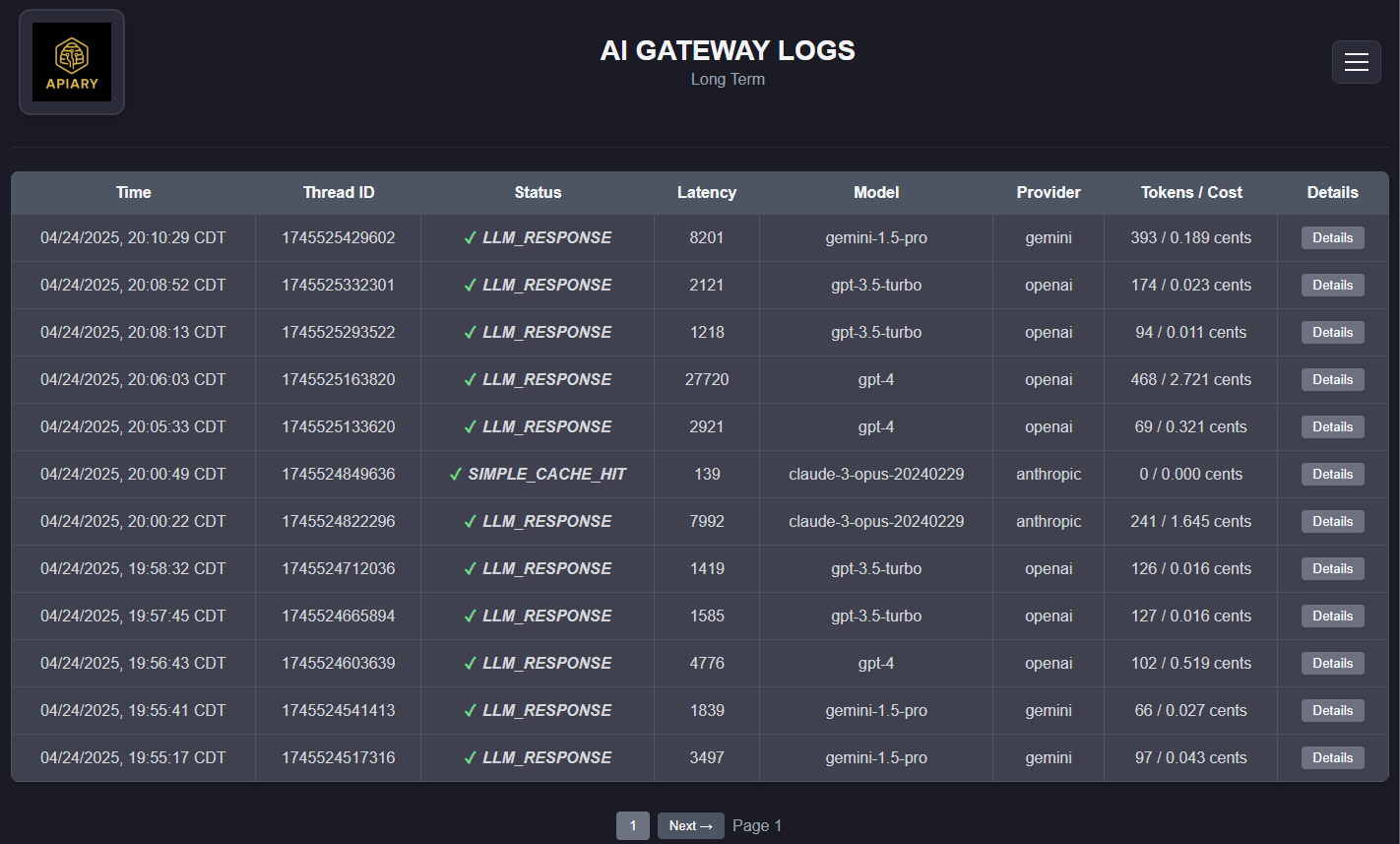

Apiary includes logging and cost tracking across multiple LLM providers in a single developer dashboard.